Hanbyel Cho

I am currently an AI Robotics Research Scientist at Samsung Electronics, working on AI-driven humanoid whole-body control and locomotion. My research integrates human motion understanding, reinforcement learning, and control theory to enable humanoid robots to learn from human motion and adapt to real-world environments.

I earned my PhD in Electrical Engineering from KAIST, where I was advised by Prof. Junmo Kim. My doctoral research focused on capturing and reconstructing human-related subjects, such as body pose and shape, under real-world conditions for practical applications in immersive environments. During my PhD, I gained valuable experience through two internships at Meta Reality Labs: one in Pittsburgh, PA, USA (2023), and another in Redmond, WA, USA (2024). I was also honored to be selected as a finalist for the Qualcomm Innovation Fellowship in both 2022 and 2023.

If you're interested in collaborating, feel free to reach out!

tlrl4658 [at] gmail.com / CV (updated: Feb 2025) / Bio / Scholar / LinkedIn

Publications

My current research centers on learning-based humanoid whole-body control and locomotion, aiming to enable robots to learn agile and adaptive motion from data. Previously, I studied 3D human reconstruction and motion understanding, which naturally led to my current interest in AI-driven humanoid behavior learning. Below are my recent publications; some papers are highlighted.

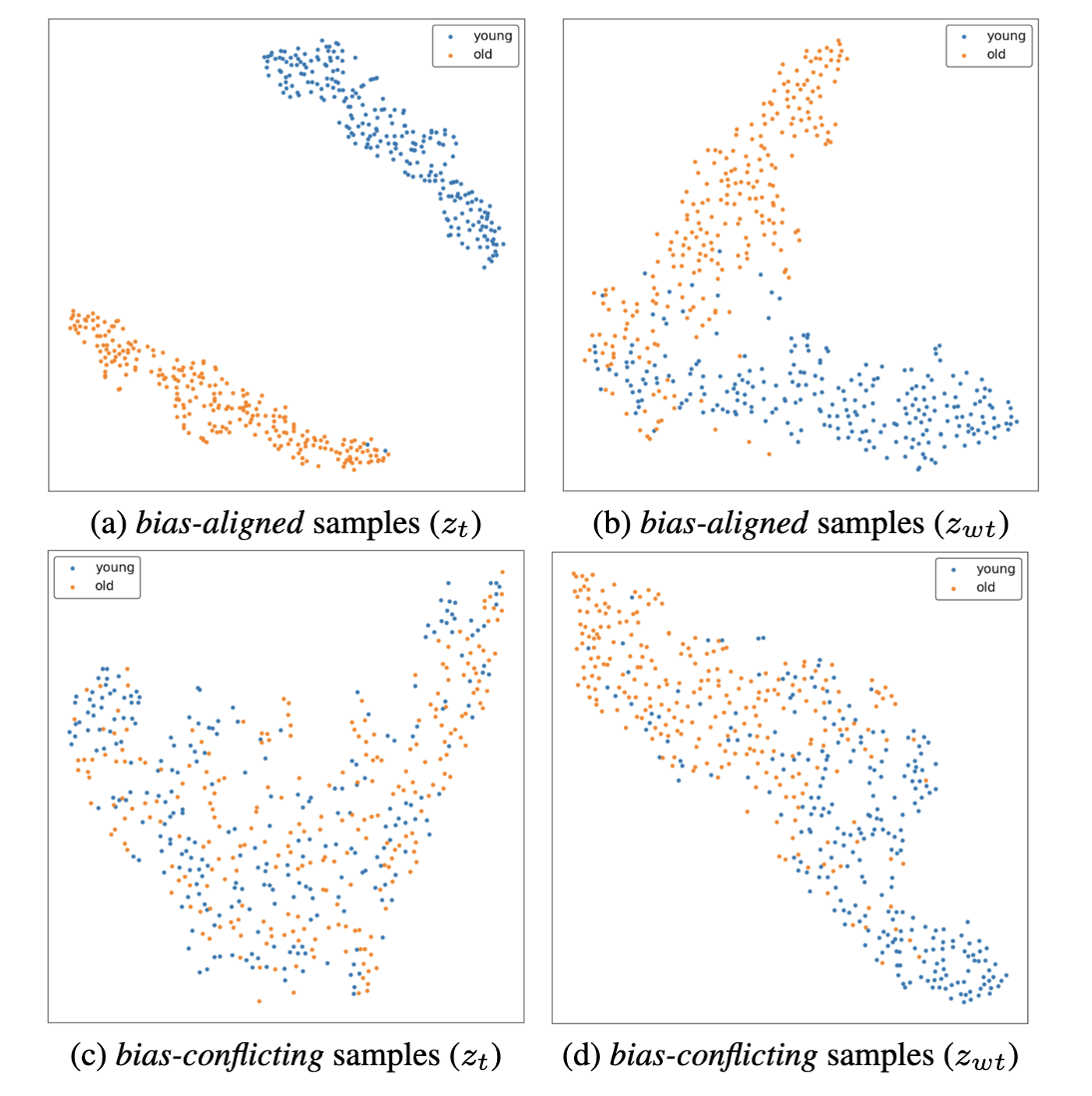

Controllable Feature Whitening for Hyperparameter-Free Bias Mitigation

Yooshin Cho, Hanbyel Cho, Janghyeon Lee, Hyeong Gwon Hong, Jaesung Ahn, Junmo Kim

IEEE/CVF International Conference on Computer Vision (ICCV), 2025

arXiv

A lightweight, hyperparameter-free debiasing method that whitens feature representations to remove bias, achieving a fairness-utility trade-off without adversarial learning.

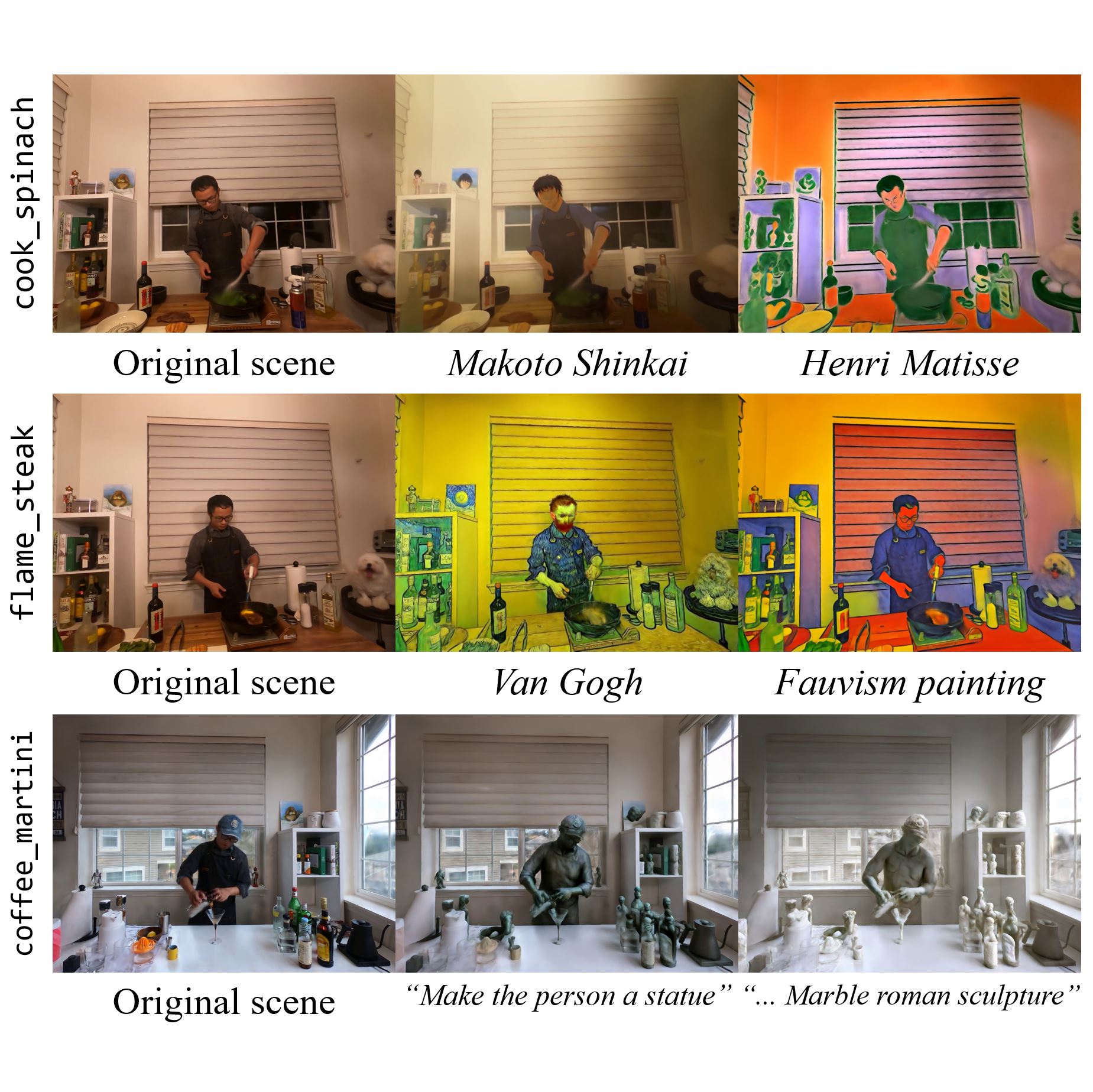

Efficient Dynamic Scene Editing via 4D Gaussian-based Static-Dynamic Separation

Joohyun Kwon*, Hanbyel Cho*, Junmo Kim (*Equal contribution)

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025

project page / arXiv

An efficient 4D dynamic scene editing method based on 4D Gaussian Splatting that focuses edits on the static 3D Gaussians and refines the result with score distillation, achieving faster, high-quality edits.

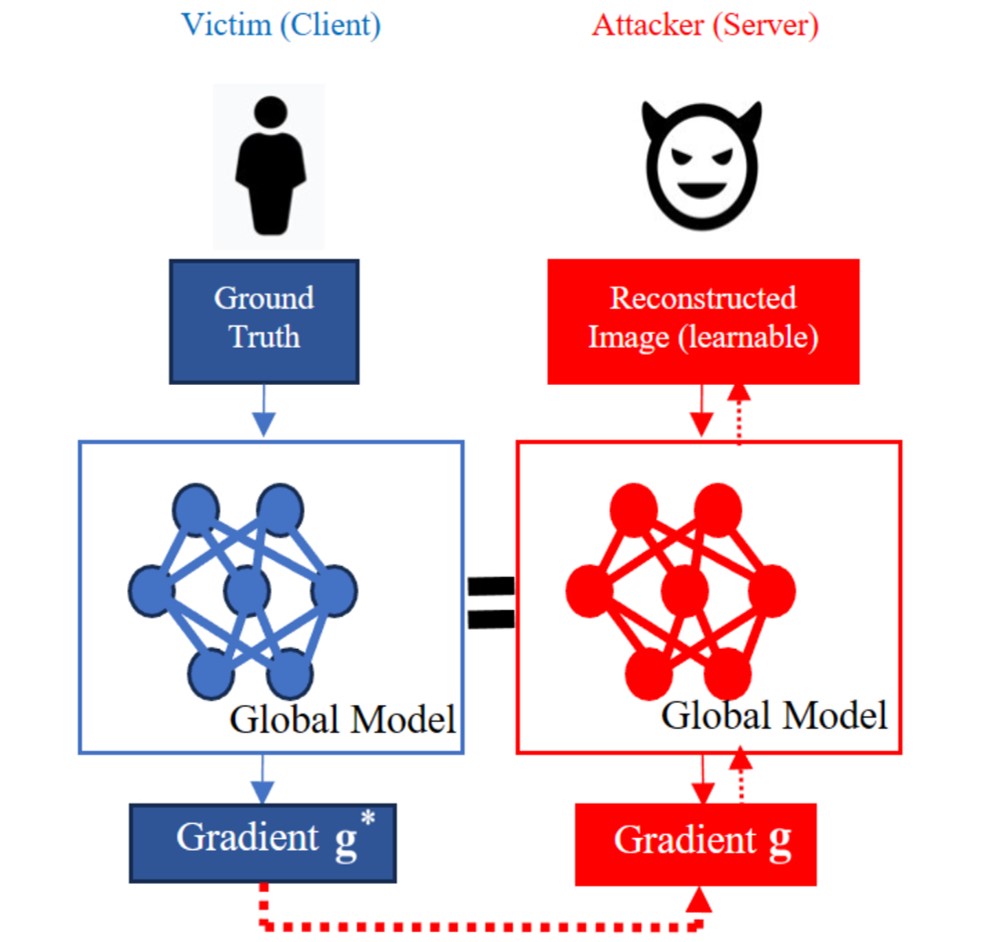

Foreseeing Reconstruction Quality of Gradient Inversion: An Optimization Perspective

Hyeong Gwon Hong, Yooshin Cho, Hanbyel Cho, Jaesung Ahn, Junmo Kim

The 38th Annual AAAI Conference on Artificial Intelligence (AAAI), 2024

arXiv

Proposes a novel loss-aware vulnerability proxy (LAVP) for gradient inversion attacks in federated learning, using the maximum or minimum eigenvalue of the Hessian to capture sample vulnerability beyond the traditional gradient norm.

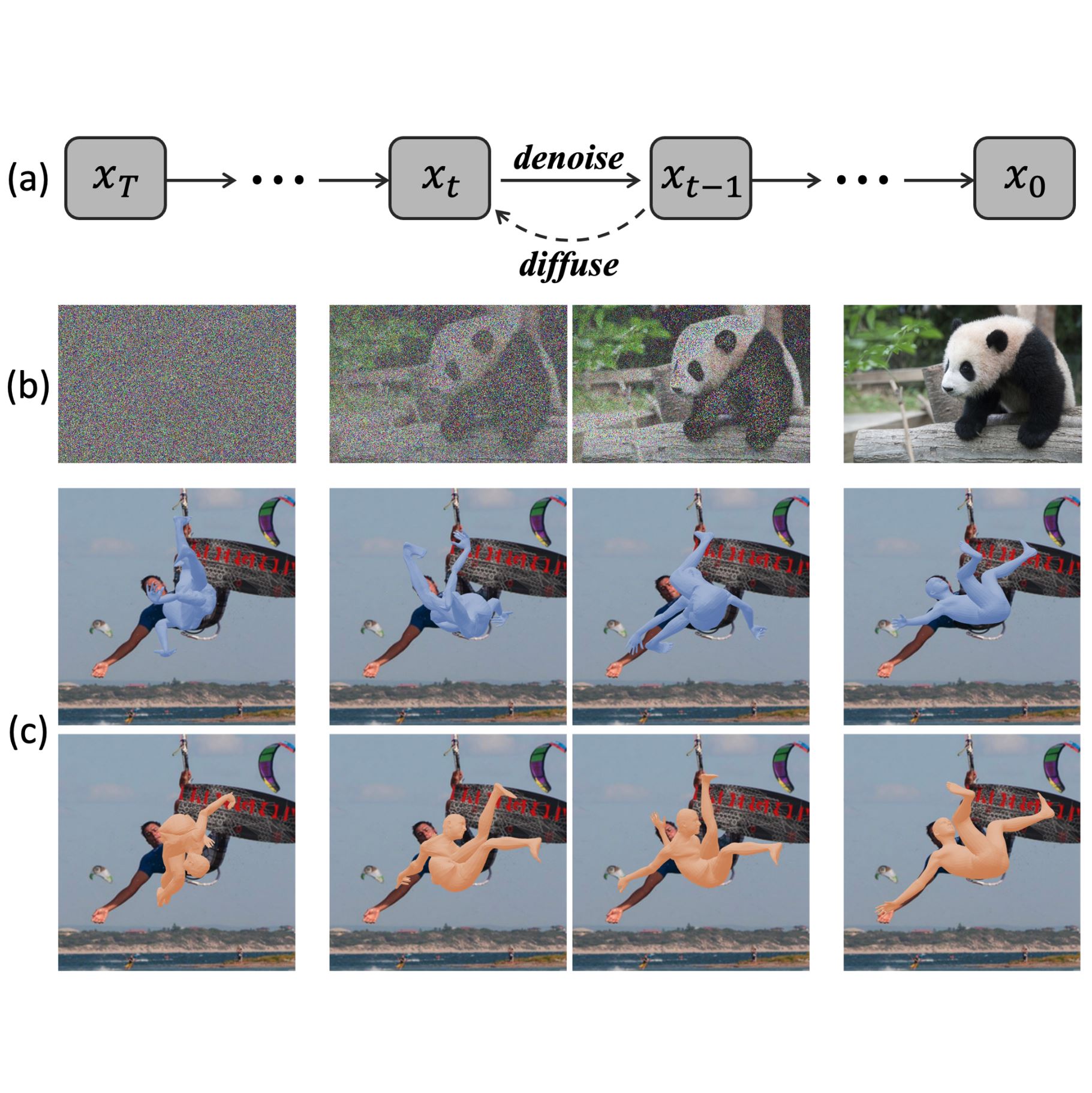

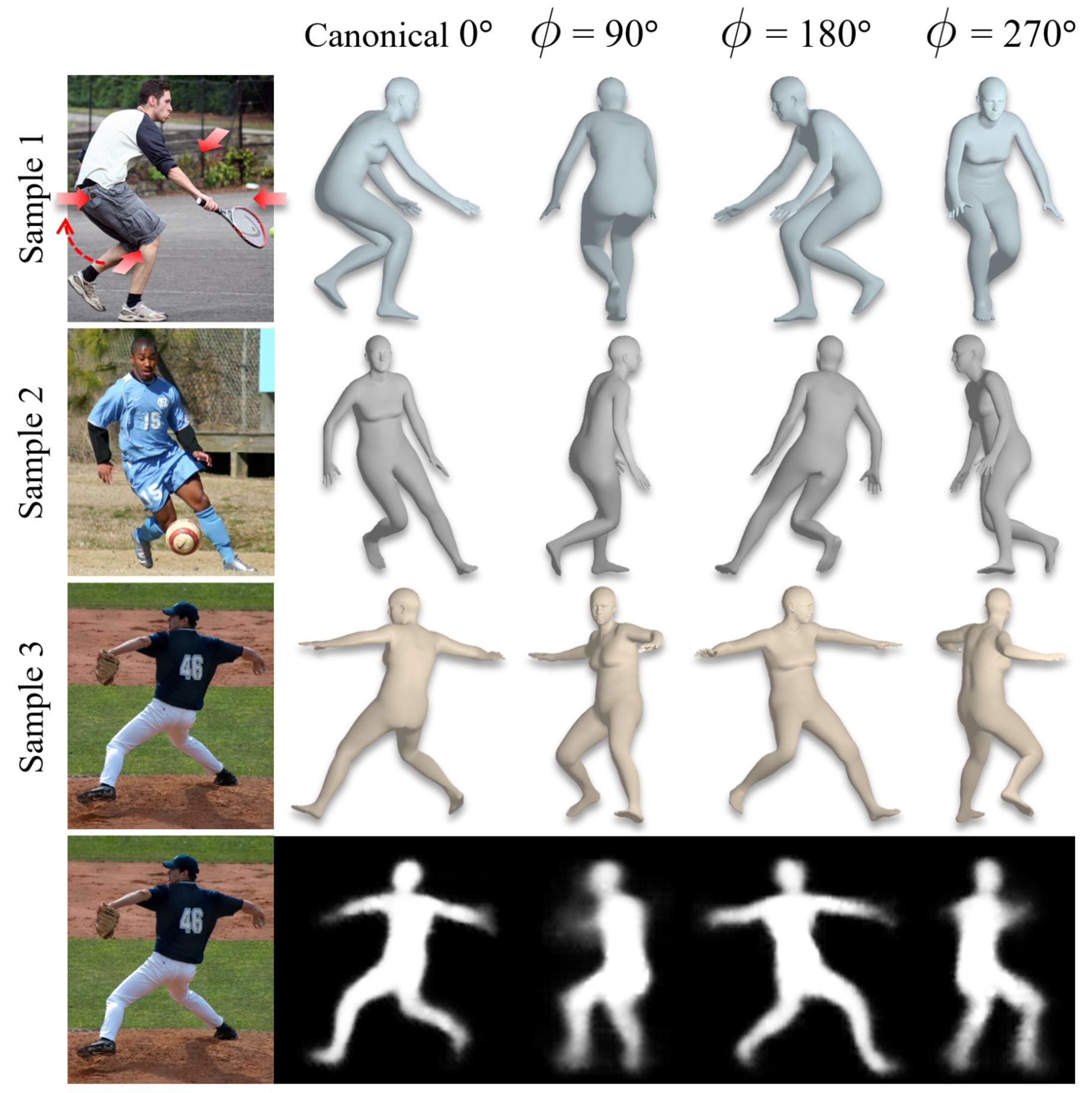

Generative Approach for Probabilistic Human Mesh Recovery using Diffusion Models

Hanbyel Cho, Junmo Kim

IEEE/CVF International Conference on Computer Vision (ICCV), 2023, CV4Metaverse Workshop

arXiv

Proposes a novel generative framework for 3D human mesh recovery, leveraging denoising diffusion to model multiple plausible outcomes and address the inherent ambiguity in the task.

Implicit 3D Human Mesh Recovery using Consistency with Pose and Shape from Unseen-view

Hanbyel Cho, Yooshin Cho, Jaesung Ahn, Junmo Kim

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023

arXiv

Leveraging neural feature fields to render multi-view feature maps and enforcing cross-view consistency enables accurate 3D human mesh recovery from a single image.

Video Inference for Human Mesh Recovery with Vision Transformer

Hanbyel Cho, Jaesung Ahn, Yooshin Cho, Junmo Kim

IEEE International Conference on Automatic Face and Gesture Recognition (IEEE FG), 2023

arXiv

Replacing naive GRU-based modeling with a Vision Transformer and a learnable Channel Rearranging Matrix reduces motion fragmentation, boosting human mesh recovery accuracy, robustness, and efficiency.

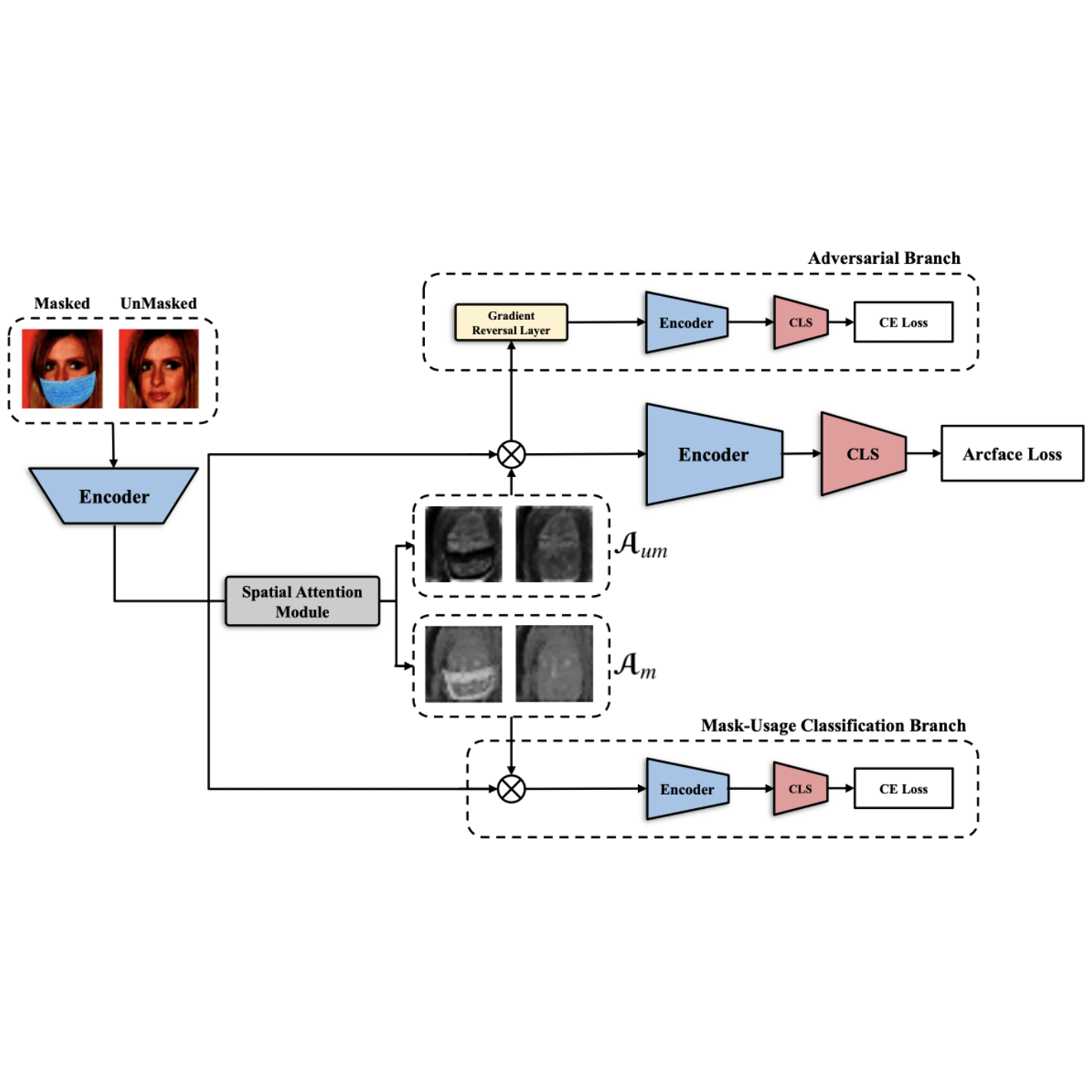

Localization using Multi-Focal Spatial Attention for Masked Face Recognition

Yooshin Cho, Hanbyel Cho, Hyeong Gwon Hong, Jaesung Ahn, Dongmin Cho, Junmo Kim

IEEE International Conference on Automatic Face and Gesture Recognition (IEEE FG), 2023

arXiv

A masked face recognition approach that leverages multi-focal spatial attention to precisely isolate features from the unmasked facial regions.

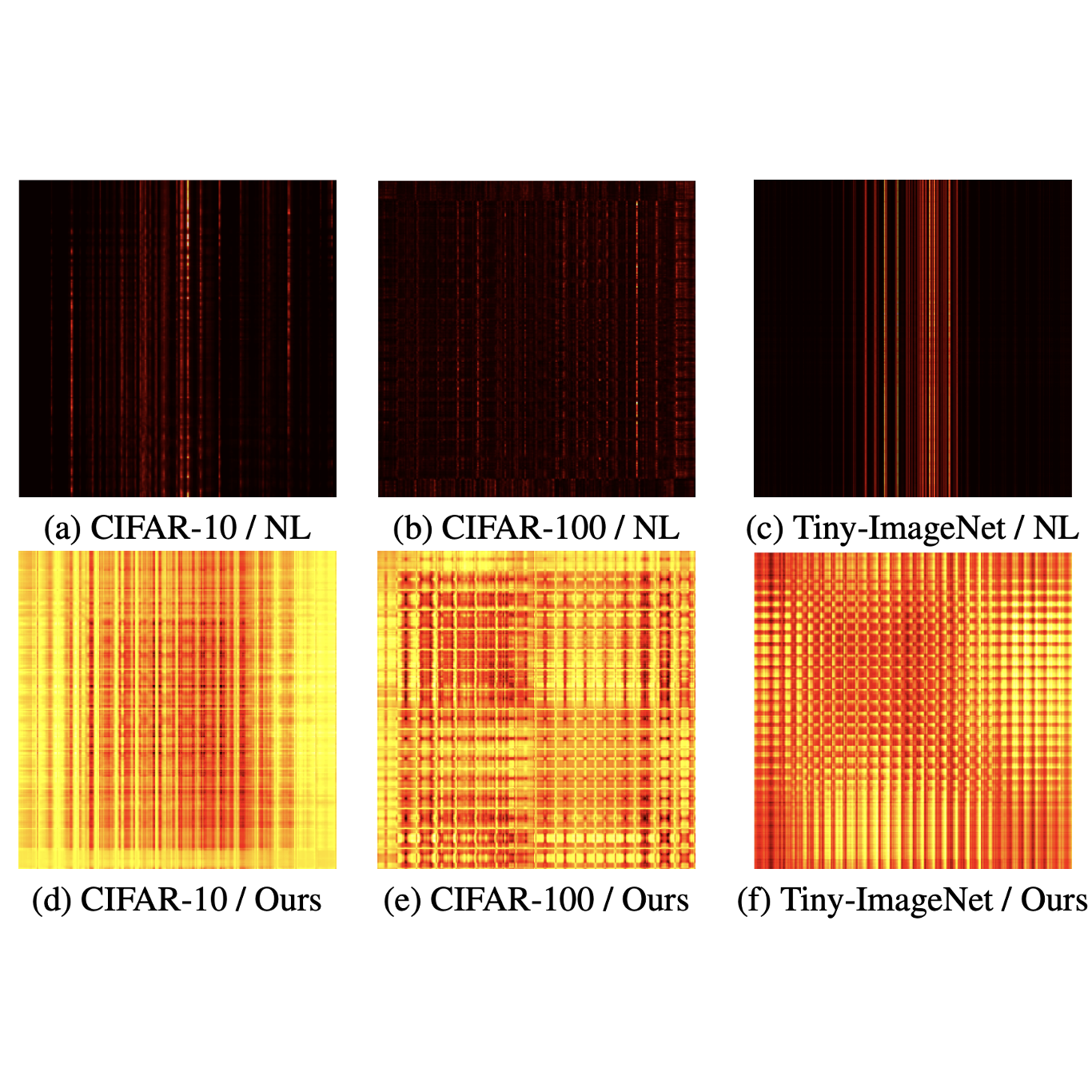

Rethinking Efficacy of Softmax for Lightweight Non-Local Neural Networks

Yooshin Cho, Youngsoo Kim, Hanbyel Cho, Jaesung Ahn, Hyeong Gwon Hong, Junmo Kim

IEEE International Conference on Image Processing (ICIP), 2022

arXiv

Replacing softmax in non-local blocks with a simple scaling factor mitigates softmax's over-reliance on vector magnitude, thereby improving performance, robustness, and efficiency.

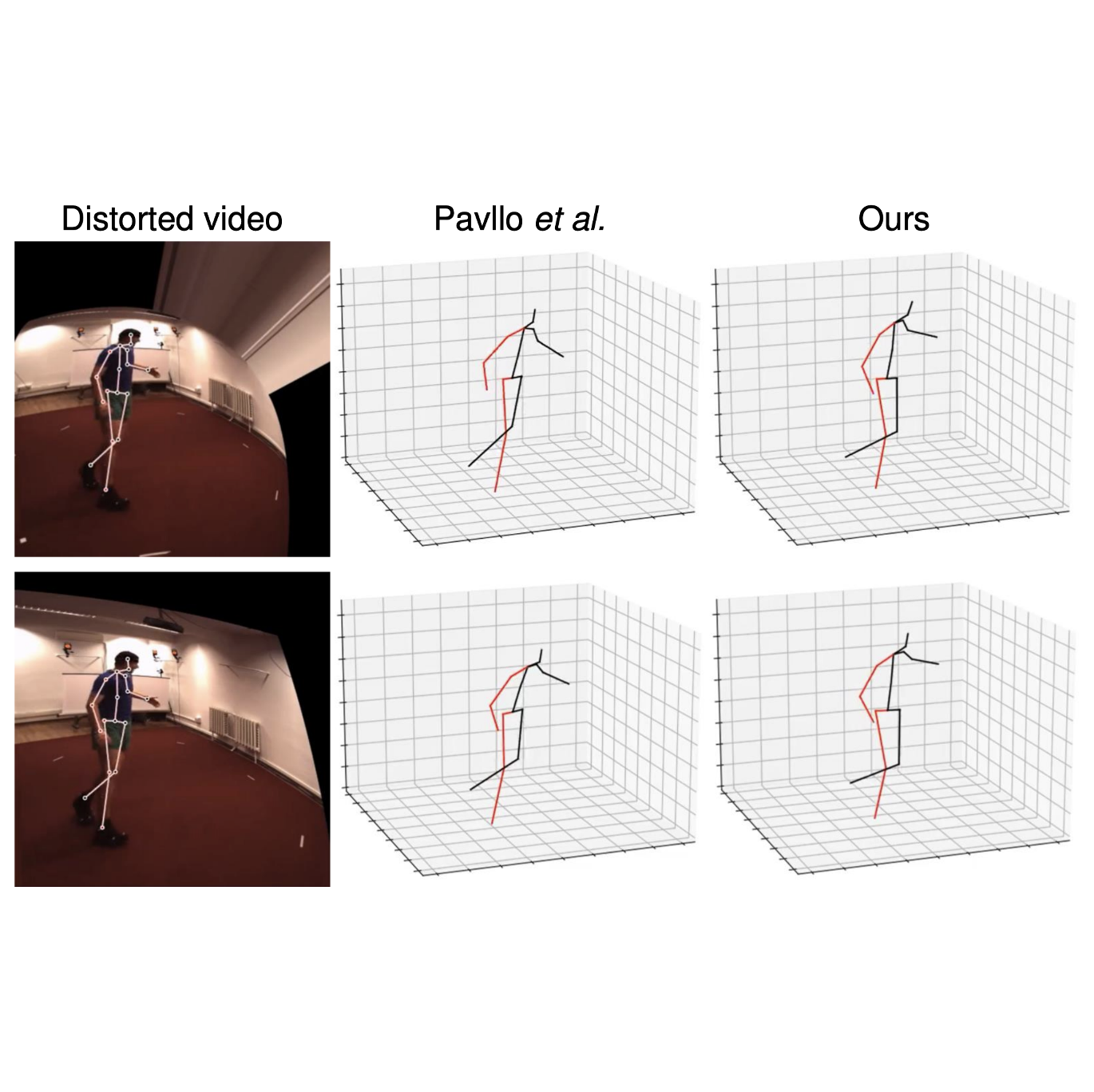

Camera Distortion-aware 3D Human Pose Estimation in Video with Optimization-based Meta-Learning

Hanbyel Cho, Yooshin Cho, Jaemyung Yu, Junmo Kim

IEEE/CVF International Conference on Computer Vision (ICCV), 2021

arXiv

A 3D human pose estimation model that leverages MAML and synthetic distorted data to rapidly adapt to various camera distortions without requiring calibration.

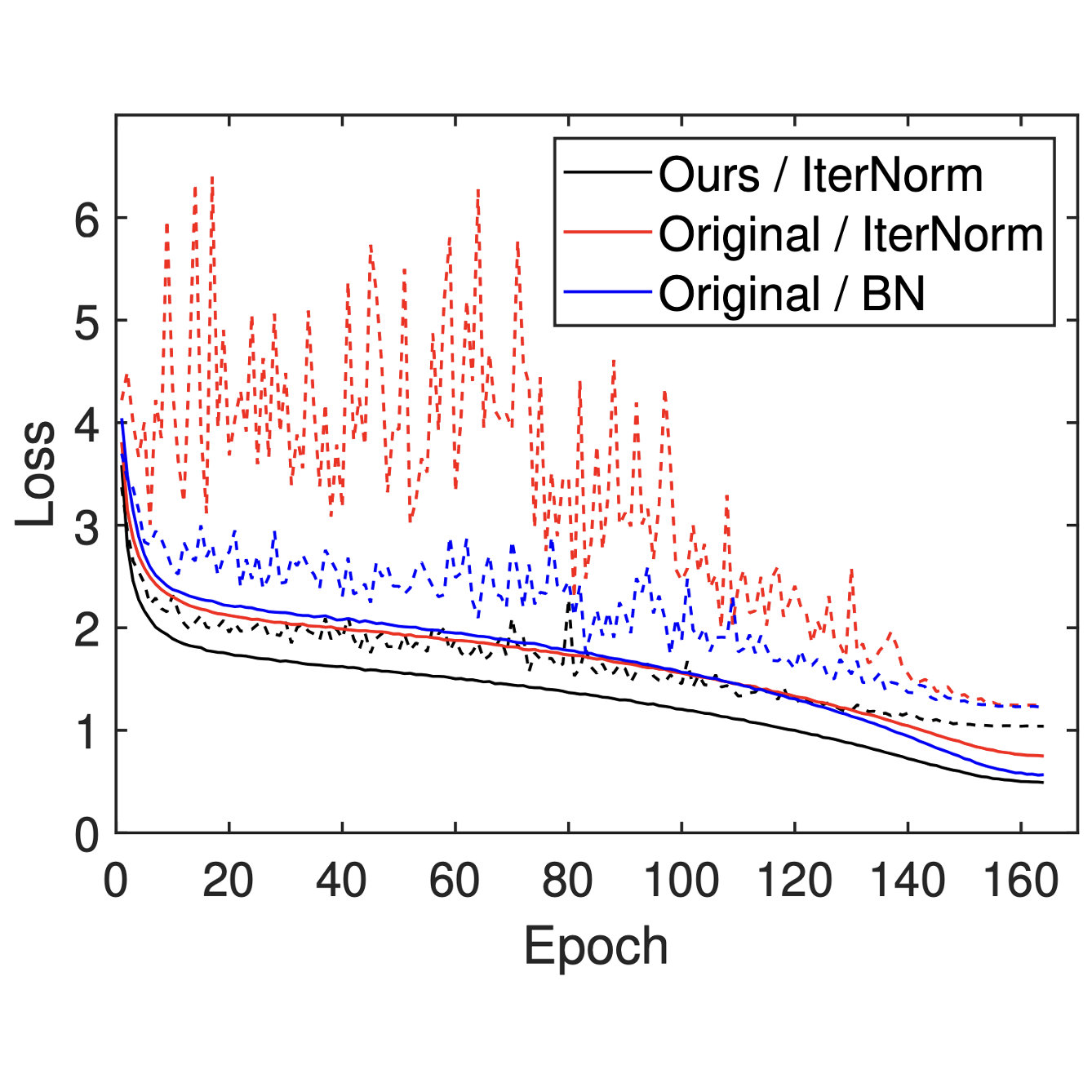

Improving Generalization of Batch Whitening by Convolutional Unit Optimization

Yooshin Cho, Hanbyel Cho, Youngsoo Kim, Junmo Kim

IEEE/CVF International Conference on Computer Vision (ICCV), 2021

arXiv

Introduces a novel Convolutional Unit that aligns whitening theory with convolutional architectures, significantly boosting the stability and performance of Batch Whitening.

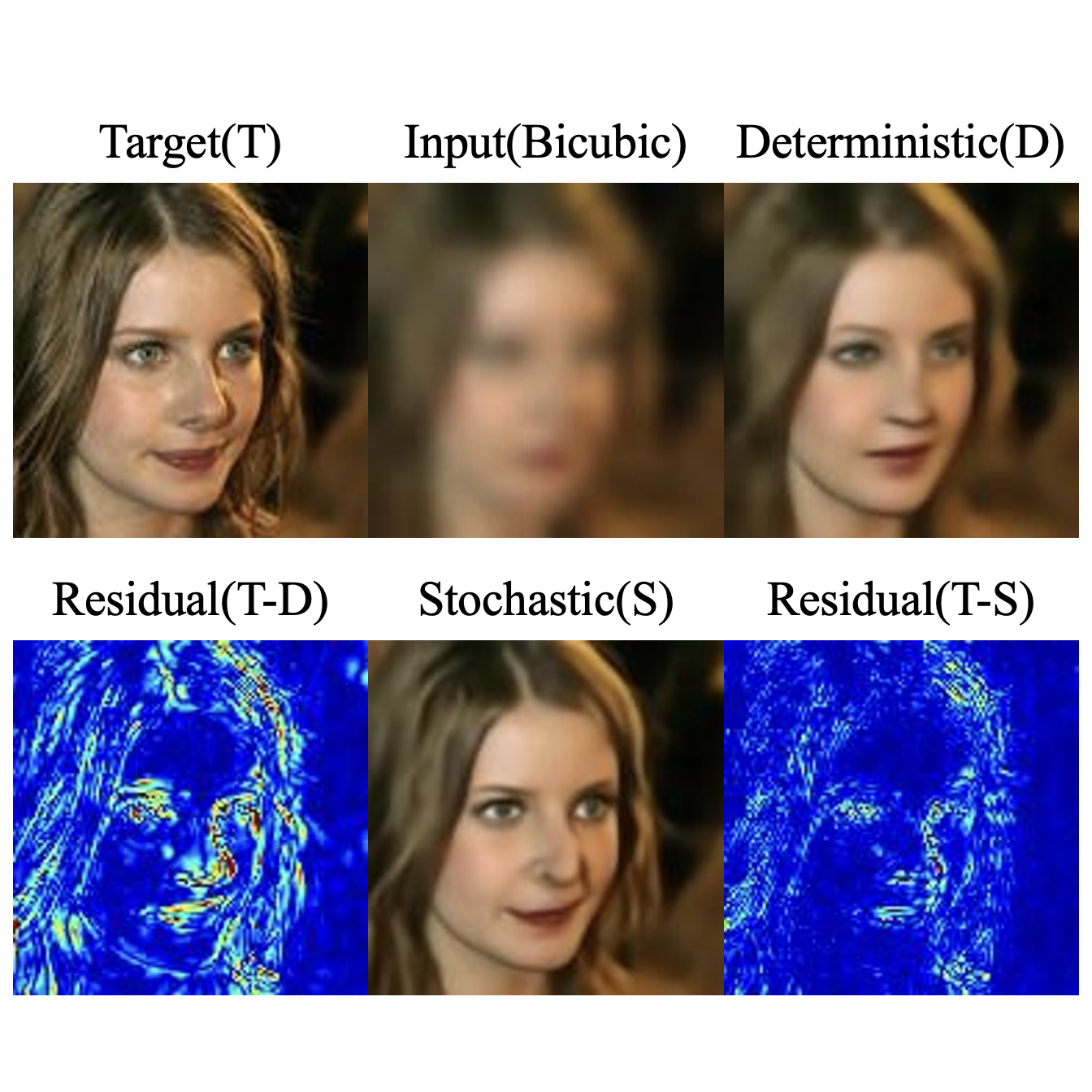

Stochastic Attribute Modeling for Face Super-Resolution

Hanbyel Cho, Yekang Lee, Jaemyung Yu, Junmo Kim

arXiv, 2020

arXiv

A stochastic modeling-based face super-resolution method that separates deterministic and stochastic attributes to reduce uncertainty and improve reconstruction quality.